As always, all my past editions (including this one) of Learning in the Loo can be found here.

– Laura Wheeler (Teacher @ Ridgemont High School, OCDSB; Ottawa, ON)

Deanna is an Itinerant Teacher of Assistive Technology in our board and sends out a weekly tip to staff. I have turned her latest tip into an issue of Learning in the Loo!

– Laura Wheeler (Teacher @ Ridgemont High School, OCDSB; Ottawa, ON)

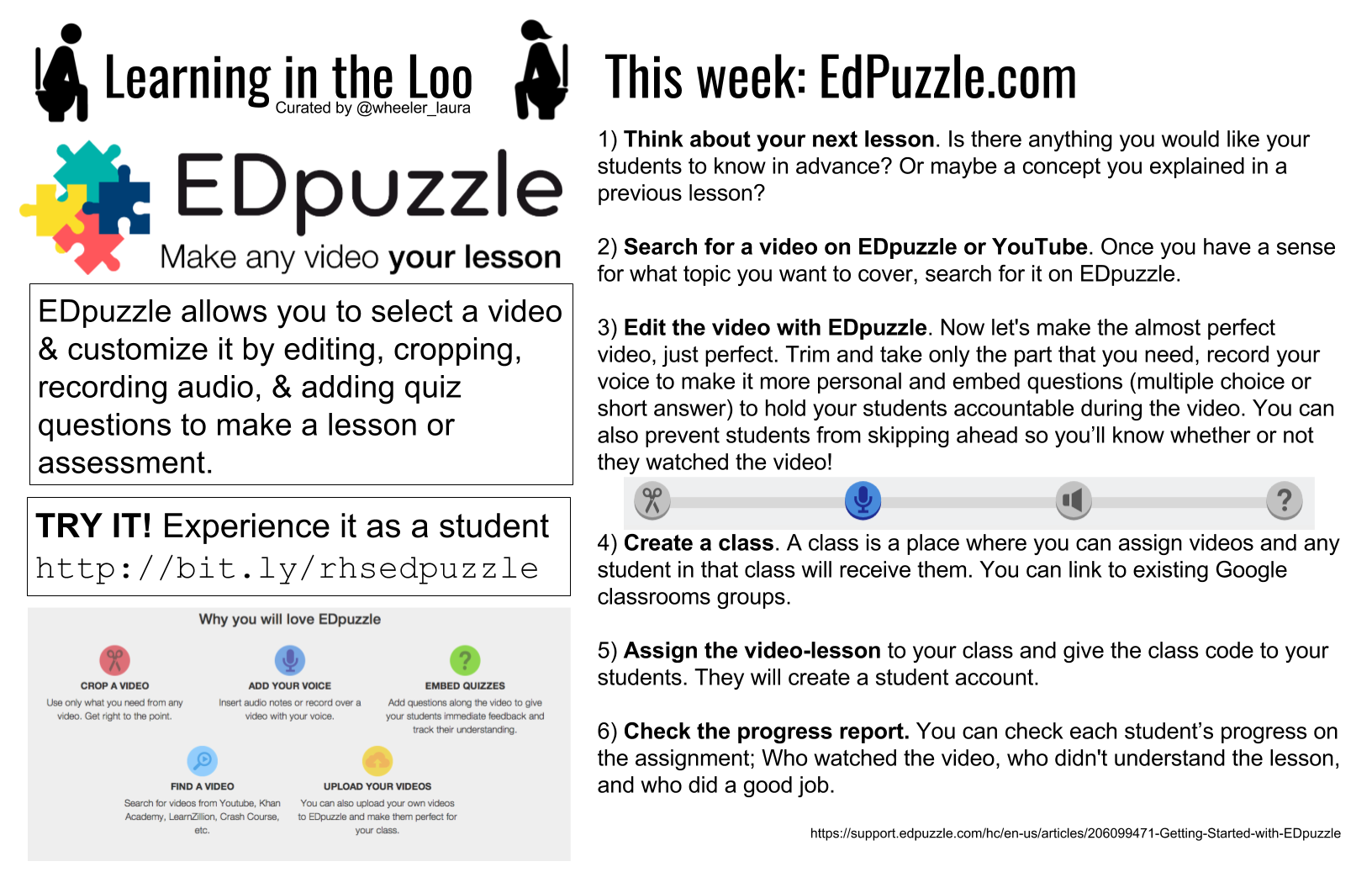

Earlier this year I was listening to an episode of the This Week in Ontario EduBlogs podcast & they were sharing a blog post by EduGals about 10 tools for curating instructional videos. I thought it would make a good Learning in the Loo poster & decided to focus on 3 tools that would allow teachers to curate videos and have students respond in turn. So I chose to share about EdPuzzle, Google Forms/Quiz, and Pear Deck. When talking about Pear Deck I focused on Google Slides since we’re a Google board, but it works with PowerPoint slides as well.

– Laura Wheeler (Teacher @ Ridgemont High School, OCDSB; Ottawa, ON)

This week’s Learning In the Loo is a remix & continuation of this blog post I wrote a couple of months ago:

– Laura Wheeler (Teacher @ Ridgemont High School, OCDSB; Ottawa, ON)

Here in Ontario we have to report on learning skills at report card time. Some of the things we’re meant to assess in learning skills are not always visible to me, even more so when teaching virtually than face to face. So I like to ask my students to complete a self-reflection about their learning skills in my class using a rubric I created years back from the Growing Success document.

Here are the learning skills criteria outlined in Growing Success (page 17):

So I took that doc and created a rubric with the 4 levels of achievement students see for learning skills on their report card: Excellent, Good, Satisfactory & Needs Improvement.

I ask students to read it over and choose the one description in each row that best describes their work habits in our course. This is useful feedback to me because sometimes I don’t realise, for example, how much help a student is seeking outside of class time to persevere with the course material (tutoring, homework club, etc.).

Then on the back (I do this as a paper task when we’re face to face for in-school instruction) I ask students to review their evidence record that I’ve emailed them and tell me what level they think they’re currently achieving in the course, as well as which expectations are strong or weak:

This accomplishes a few things:

The last section asks students to reflect on certain aspects of the course, which gives me feedback for the next semester (or quadmester now). These can obviously be adapted to match the work done in your class as well as the criteria you’d like feedback on:

When we teach face to face I print this document up on legal size paper & give one to each student to fill out & write their name at the top. When virtual I assign it in Google Classroom choosing to make a copy for each student which automatically puts their name in the title. If you want anonymous feedback for that last part to ensure they’re willing to give you the honest goods, you can turn that last set of questions into a Google Form that doesn’t collect identifying info instead.

As always, here’s the whole document (the virtual teaching version – find the face to face version in the Version History) so you can make a copy & edit as you see fit. Are there reflection questions you love asking at the end of a course that you don’t see here? I’d love to hear what they are in the comments below!

– Laura Wheeler (Teacher @ Ridgemont High School, OCDSB; Ottawa, ON)

This year is tough. No doubt about it. Colleagues are having conversations around different ways to assess in the virtual teaching environment in order to ensure students aren’t cheating by, for example, using an app like Photomath to solve equations for them. And sometimes when you look for more open-ended prompts that allow for variety & no one exact solution, it winds up taking longer to mark. So I thought this question from Sabrina on Twitter was timely:

Have a look through all the replies but I thought I would feature a few that stood out to me here. Love this idea from Karen about giving herself a timeline for returning work, graded or not. It happened so often that I trucked piles of marking home, night after night, to wind up not even taking it out of the bag because “I’ll do it tomorrow before class when I get to school & am fresh with renewed energy & motivation”.

Remember that here in Ontario our final grade is meant to be based on all three of observation, conversation and product. Most of us tend to rely too much on product, me included. It’s tough to get a recorded level written down based on observation & conversations because they happen in the moment & you don’t necessarily have the time to stop & record a level on a checklist. But if you can’t do it in real time, it can be helpful to set aside a chunk of time after class to use some well-planned observational rubrics, like Meaghan suggests here, and record levels of achievement or anecdotal notes based on your observations & conversations from that class:

I appreciated this response from Krista because I could really see myself in it. My style is to mark an entire batch at once. But as Krista points out, it’s hard to carve out that magical block of time to do that. She suggests chunking down to smaller sets. For me, that involved marking an entire set of 1 question. Then coming back to mark the entire set of question #2, etc.

If you’re like me and watch a lot of videos on YouTube (or listen to podcasts) at a speed of 1.25 up to 2 times faster than recorded, then this suggestion from Michael might appeal to you. Have small group Meets & record them. You could open several meets & move between to supervise after hitting record in each. Then rewatch them at a later date but sped up (click the settings cog along the bottom of the video saved to Drive & choose Playback Speed to adjust as desired):

In my first practicum in a grade 8 science classroom I planned a ton of hands-on labs because what’s better than hands-on science, right? My associate teacher smiled and asked if I planned to mark each of the lab reports? Of course, I said! Oh man!!! Her smile should have told me she knew something I didn’t. All those labs are sooooo much work to read through & mark. So I love this suggestion from Audra about only asking for certain sections of the lab report for each lab. This strategy could be applied to other assignment styles beyond science lab reports too:

Another strategy I’ve played with over the years is audio feedback. For a while I was uploading images of student Math tests into Explain Everything & posting a personal video to each student with their feedback as I circled and pointed to that part of their work in the image on the screen. I don’t know if it saved me time or if I spent the same amount of time giving more detailed and informative feedback, but either one is a win. So I liked this reminder from Melanie about audio feedback:

Have you got tips for being a more efficient marker/grader? How to give better or more detailed feedback in the same time or less? Leave a comment below – I’d love to hear your strategies!

– Laura Wheeler (Teacher @ Ridgemont High School, OCDSB; Ottawa, ON)

My grade 10 applied class this year has some students with some serious gaps in their math abilities/knowledge. We had our first test last week (which is late – about 5 weeks in – too many interruptions to class so far; assemblies, etc). For the first time I tried Howie Hua’s strategy with my class:

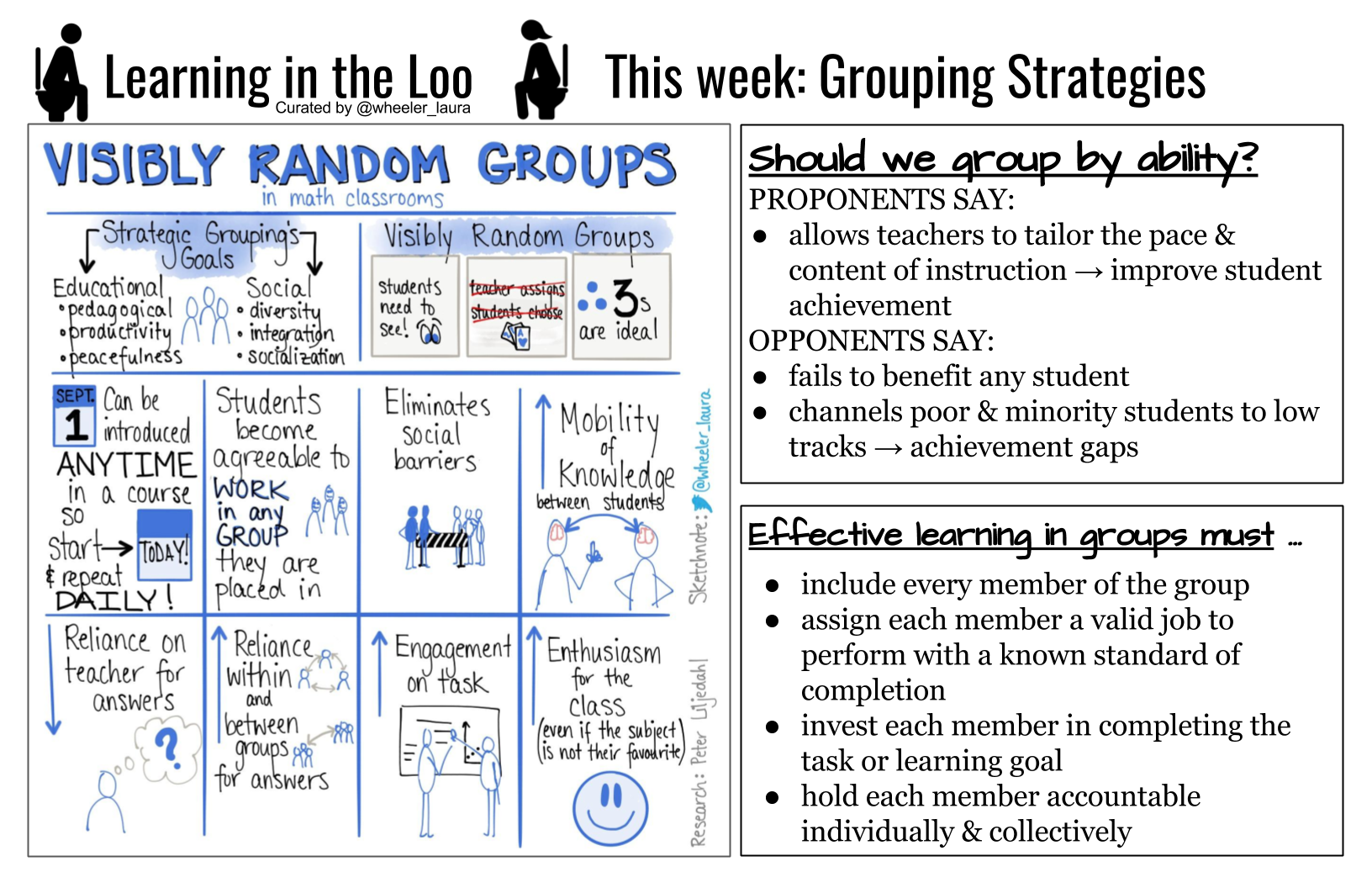

I asked my Tweeps if they do VRG for this or let students choose. Almost everyone said they let students choose. I may try VRG next time as there were a couple of students who didn’t get up to talk to anyone. I’ll be asking them for feedback today about how they thought that helped them (or whether or not it did).

Unfortunately on test day due to an assembly running long that morning, they took 10 minutes away from my period. A number of students had trouble finishing. I struggle with that b/c I think many of them want more time, but simply spend the time staring at the page, not being productive in solving. This class is mostly ELLs though (more than usual) and in the past when that’s been the case & I have slower test takers I have made shorter more frequent tests.

So normally I test every 2 to 3 weeks once we’ve done activities & practice that cover 4 or 5 of the 9 overall expectations for the course. Then the test is 2 pages double sided, each side of a page is 1 overall expectation (usually one or two problem solving tasks). In the past I’ve changed that to testing every 1 to 1.5 weeks on 2 of the 9 expectations instead. I think that’s what I’ll need to do here so that if a student needs more time they can have it within that class period.

I haven’t yet returned their marked tests (I put feedback only on the test & they receive their grade separately a day later on their evidence record via email; research shows that mark + feedback results in students caring only about the mark, not the feedback). Yesterday I sketched on the board the same triangle based prism they’d had in a Toblerone bar question on the test but with different dimensions. I asked them to find surface area & volume (dimensions were such that they needed to use Pythagorean Theorem to find the height of the triangular base). Most groups took almost the entire period to solve this!!! One group never got beyond the Pythagorean Theorem part. I ran around like a chicken with my head cut off trying to facilitate, correct misconceptions, etc.
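For readers who want to check the arithmetic behind a problem like this one, here is a minimal sketch. The actual dimensions from the board aren't given above, so the numbers below are made up, and the sketch assumes the triangular base is isosceles (a base edge plus two equal slanted edges), which is what makes the Pythagorean Theorem step necessary.

```python
import math

def isosceles_prism(base, side, length):
    """Return (triangle height, volume, surface area) of a triangular prism.

    Assumes an isosceles triangular face with one edge `base` and two
    equal edges `side`; `length` is the distance between the two faces.
    All names and dimensions here are hypothetical.
    """
    # Pythagorean Theorem: the height splits the base into two equal halves.
    height = math.sqrt(side ** 2 - (base / 2) ** 2)
    triangle_area = base * height / 2
    volume = triangle_area * length
    # Two triangular faces plus three rectangles (one per triangle edge).
    surface_area = 2 * triangle_area + length * (base + 2 * side)
    return height, volume, surface_area

# Made-up dimensions: base 6 cm, slanted edges 5 cm, prism length 10 cm.
height, volume, surface_area = isosceles_prism(6, 5, 10)
print(height, volume, surface_area)
```

With those made-up numbers the triangle's height works out to 4 cm, which keeps the rest of the calculation tidy enough to check by hand.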

As an aside: A colleague came by to watch (said he’s been meaning to for a while now) and I had to ask him not to write on the students’ boards or tell them how to do the next step. It reminded me how hard it is to teach other teachers the skill of not always telling students the answers, but instead asking questions that help them figure it out for themselves. He said “but they’re nodding so they understand what I’m showing them”. I explained I want them doing the math, not him. I asked him to talk with them but not to do the math for them.

I also got a short video of the groups getting started on the problem if you’re interested:

– Laura Wheeler (Teacher @ Ridgemont High School, OCDSB; Ottawa, ON)

I first wrote about how I was using Khan Academy with my students in late 2014 here. Then earlier this school year I wrote a response to another blogger’s post about why online Math practice tools aren’t good, here. Since that first 2014 post, Khan Academy has changed & improved quite a bit and so has how I use it with my students. So let me share a little of my Khan Academy pedagogy.

From here on out, KA = Khan Academy

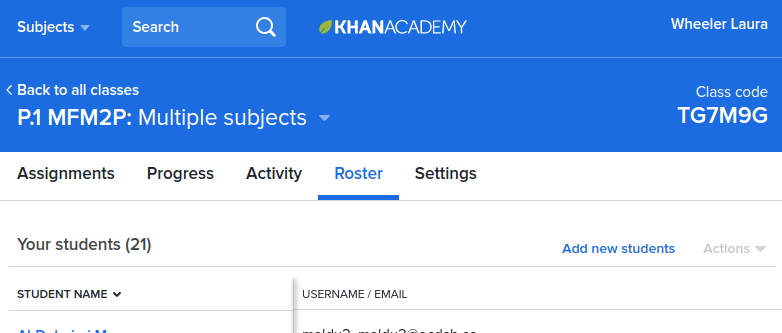

Students and teachers can use KA anytime they like without having an account or without joining a teacher’s KA class. However, by making an account, the student’s progress gets tracked & saved in KA, allowing the site to better offer next steps of Math for them to work on. And by joining the teacher’s class, the teacher has the ability to assign practice problems & check student progress. I highly recommend using it as a class like this.

Step 1: Create a class

If you haven’t yet, make a KA account yourself. I often log in from my chromebook & so I love the simplicity of the red Google button that automatically logs me in using my school board Google account.

Then head to your “dashboard” https://www.khanacademy.org/coach/dashboard & click on “add new class” (on the right side). Enter information for your class – I like to name it by period & course code – or choose the “import from Google Classroom” option if you already have all your students connected to Google Classroom.

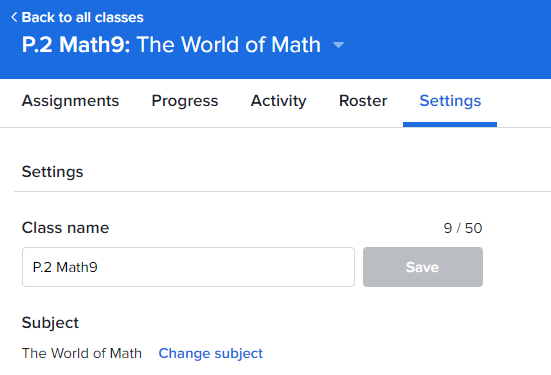

You will be prompted to tell KA what Math subjects your students are learning. I choose “World of Math”.

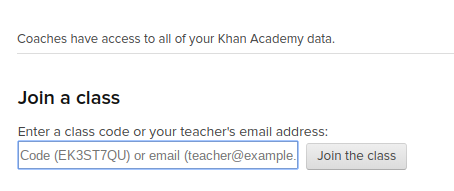

Step 2: Get students in your KA class

Two ways to do this:

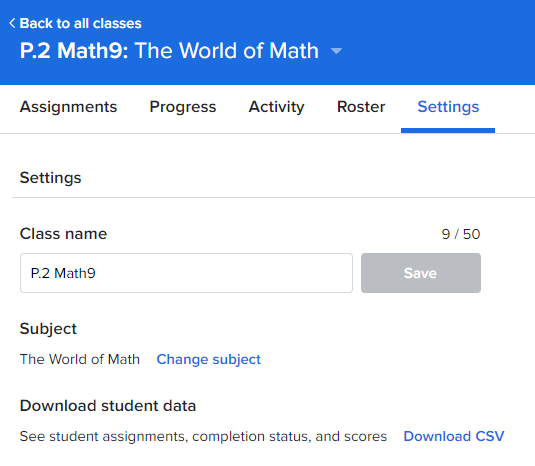

If at any time you’d like to change the name of your class or change which subjects you attributed to the class at the beginning, you can click on the class name in your dashboard & then choose Settings:

Step 3: Find content & Assign it

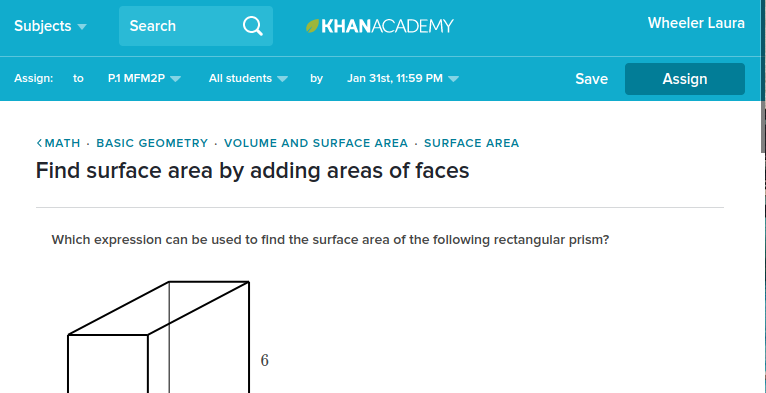

So let’s say that today we did a 3 act math task or problem-based learning activity involving surface area & tomorrow I want my students to do some individual practice on surface area. I use the search bar at the top of KA to search for that topic. I click on “Exercises” to filter it so that I only see the practice sets:

Once you click through to the exercise set, along the top you have the “assign” options. You can choose to assign the set to one or more of your classes. By default “All students” is chosen but you can click the drop down in order to assign to only some of your students if you like (useful for differentiation). Choose the due date & time (students can complete it after the due date still but it will notify them that it’s overdue). When ready, click Assign.

Step 4: Students do the practice set

I provide class time to practice independently on KA after each activity we do in groups. What they can’t get finished in the provided class time becomes homework to complete at home.

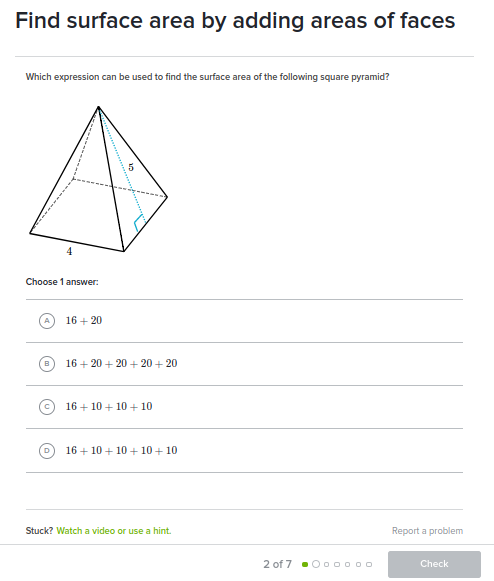

Students log in to the website (khanacademy.org/login) or download the app & sign in there. The assigned work will be on their dashboard in a list of assignments to do. They click “Start” next to the assignment title. I have my students work on paper so that if they get stuck they have a trace of their thinking so far for me to help them find their error or sticking point. Once they have an answer, they choose the answer (if it’s multiple choice) or type it in (paying attention to how KA wants it submitted; rounded to the hundredth or as a precise fraction instead of a rounded answer). They click “Check” and KA either tells them they are correct, or incorrect & try again.

If students are stuck they have the option near the bottom of the screen to watch a video or use a hint. The KA videos are pretty traditional teaching and often involve tricks like FOIL. But they are better than no help at all when a kid is at home & stuck on a problem. Hints are literally the next step in the problem given to them. They can keep pressing hint until the whole solution is shown & explained. A student who uses any hint can still finish the question, but it won’t count as successful. I believe KA’s recent changes mean students need to get 70% of the problem set correct to be considered “practiced” on that skill.
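To make that scoring rule concrete, here is a rough sketch of how it could be computed. It is only an illustration of the description above (hints don't count, 70% needed), not KA's actual code, and the function name and data shape are invented for the example.

```python
def is_practiced(results, threshold=0.7):
    """Decide whether a problem set counts as "practiced".

    `results` is a list of (correct, used_hint) pairs, one per question.
    A question only counts as successful if it was answered correctly
    without any hint. The 70% threshold is the figure quoted above,
    not a confirmed KA setting.
    """
    if not results:
        return False
    successes = sum(1 for correct, used_hint in results if correct and not used_hint)
    return successes / len(results) >= threshold

# Seven clean correct answers out of ten meets the threshold...
clean = [(True, False)] * 7 + [(False, False)] * 3
print(is_practiced(clean))

# ...but if one of those seven needed a hint, it no longer does.
with_hint = [(True, False)] * 6 + [(True, True)] + [(False, False)] * 3
print(is_practiced(with_hint))
```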

Step 4: Checking their work

KA tells students immediately if they get a question right or wrong. Students cannot move to the next problem until they’ve entered the correct answer for the current one. So students get immediate basic feedback about right or wrong.

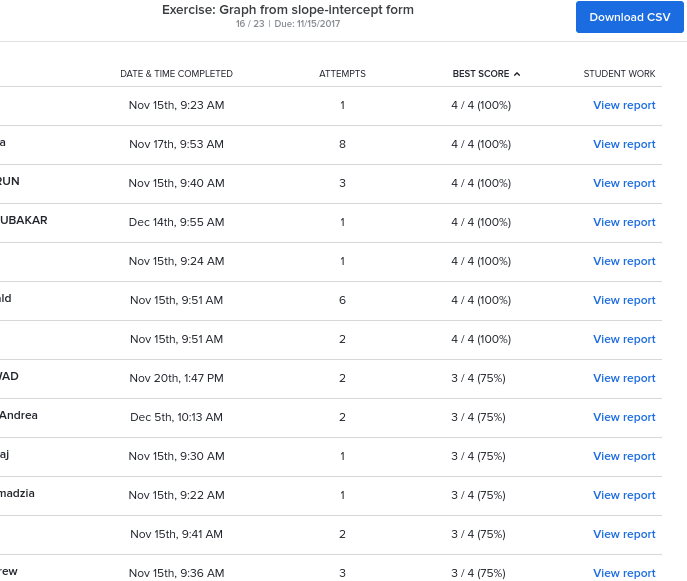

From the dashboard for a given class you can see the current assignments as well as past ones (whose due date is past). If you click on the number of students that have completed an exercise next to its title (ex. 3/15), you can see a list of students and their scores. You can sort by date, number of attempts, score, etc. This can also be downloaded as a CSV file (which opens as a spreadsheet in Google Sheets or Excel or similar programs).

If you click on View Report next to the assignment title (not the one next to each student in the above image), you can see which questions the students had the most trouble with:

Step 5: Assigning further homework & Differentiating

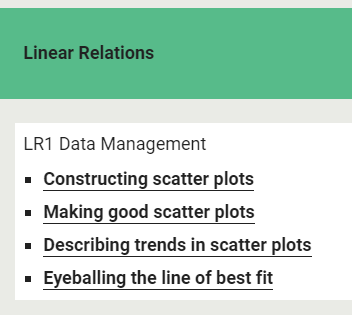

I have created a list of all the KA exercises that meet the curriculum needs for each course I teach, divided by overall expectation. You can see an example here:

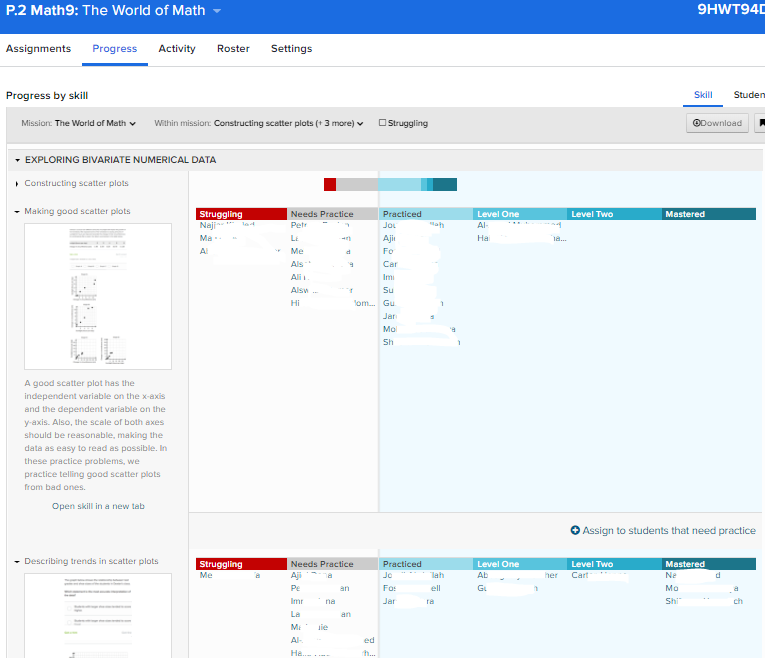

I list the practice from easiest to hardest (or in the order in which we will study it). When I am assigning the 2nd or 3rd practice set from that list to my students, I will use the “Progress” tab for each class & I will click on “Within mission” in the top gray bar & search for the list of practice sets for that expectation (you can see this screen below). Any student that is still in the “needs practice” or “struggling” column will be reassigned the first homework. Any student that is “practiced” or above on the first homework gets assigned the 2nd homework, and so on until each student has been assigned the next practice set for them to work on according to their completed work to that point. I find this helpful to not overwhelm students with a practice set they are not yet ready to tackle individually.
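The reassignment rule in that paragraph can be sketched in a few lines. This is just one way to express it, done by hand in the KA dashboard in practice; the practice set names and the dictionary shape are hypothetical, and the level names are the ones mentioned above.

```python
# Hypothetical ordered list of practice sets for one overall expectation,
# easiest first (or in the order the class will study them).
PRACTICE_SETS = ["Practice set 1", "Practice set 2", "Practice set 3"]

# Levels that mean the student should stay on (or repeat) a set.
NOT_READY = {"needs practice", "struggling", "not started"}

def next_practice_set(levels, practice_sets=PRACTICE_SETS):
    """Return the next set to assign to one student, or None if all are done.

    `levels` maps a practice set name to that student's current level.
    A set with no recorded level is treated as "not started".
    """
    for name in practice_sets:
        if levels.get(name, "not started") in NOT_READY:
            return name
    return None

# A student still struggling on the first set gets it again.
print(next_practice_set({"Practice set 1": "struggling"}))
# A student who is "practiced" on the first set moves on to the second.
print(next_practice_set({"Practice set 1": "practiced"}))
```

Any level not listed in `NOT_READY` is treated as "practiced or above", so the sketch doesn't need to know every level name KA uses.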

Step 6: Mastery

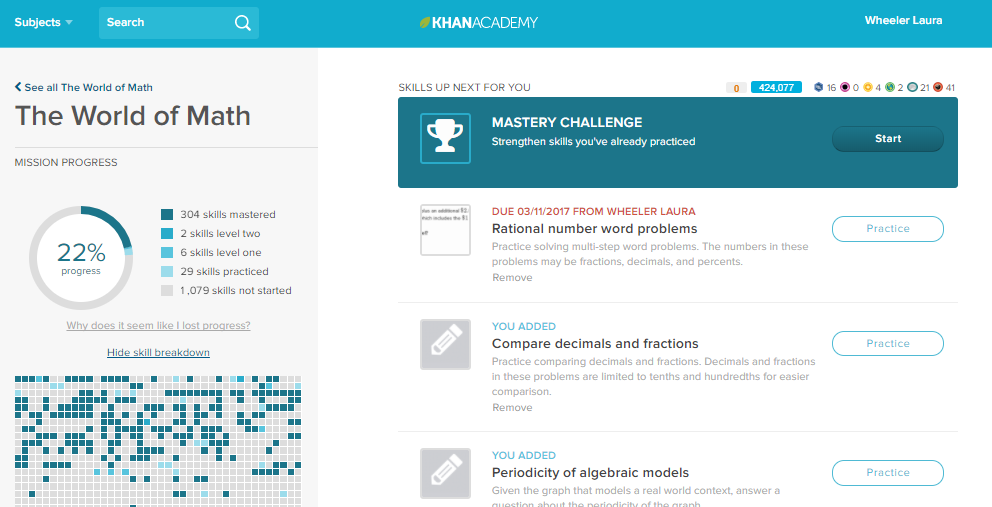

When students are done all of their assigned practice early, they have three options:

Step 7: Using the spreadsheet of data

There are two main things I do with the data that KA provides for me: communicate with parents & guardians as to their child’s progress on KA skills and use it as backup evidence at the end of a semester when I am determining whether or not a student has shown sufficient achievement of an overall expectation for the course.

On the “settings” tab of each class, you can download the student data as a CSV file:

I save that CSV file to my Google Drive & open it in Google Sheets. I move & hide columns to meet my needs. I use the IFERROR formula to compute their best score yet (not the score at the due date) for each practice set & display “incomplete” if there’s no score. I sort by student and copy & paste their table of data into an email to them & their parents.
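If you'd rather script this step than build it in Sheets, the same idea (best score so far per practice set, "incomplete" when there is no score) might look something like the sketch below. The column names `student`, `exercise` and `score` are placeholders; the real KA export may well use different headers, so adjust them to match your file.

```python
import csv
import io
from collections import defaultdict

# Made-up sample data standing in for the downloaded CSV file.
SAMPLE_CSV = """student,exercise,score
Ana,Surface area,60
Ana,Surface area,90
Ana,Volume of prisms,
Ben,Surface area,75
"""

def best_scores(csv_text):
    """Return {student: {exercise: best score, or "incomplete"}}."""
    table = defaultdict(dict)
    for row in csv.DictReader(io.StringIO(csv_text)):
        scores = table[row["student"]]
        current = scores.setdefault(row["exercise"], "incomplete")
        try:
            score = float(row["score"])
        except (TypeError, ValueError):
            # Blank or non-numeric score: keep what we have, much like
            # an IFERROR fallback in the spreadsheet.
            continue
        if current == "incomplete" or score > current:
            scores[row["exercise"]] = score
    return table

for student, scores in best_scores(SAMPLE_CSV).items():
    print(student, scores)
```

To run it on a real download, read the file's text (for example with `open(path, newline="").read()`) and pass that in place of `SAMPLE_CSV`.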

I try to do this every few weeks. I download a new .CSV file each time so I have their up to date best score to honour when they go back & try again or do mastery to level up. This is purely for feedback to them & their parents at this point.

At the end of the semester I do a final spreadsheet where I do one extra step: I sort the exercises by curricular expectation, for each student. When determining a final grade or whether or not to grant the credit, this can serve as backup evidence of their skills in the case where they have difficulty with the more complicated problem solving on our formal evaluations.
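That extra end-of-semester step, grouping one student's exercises by curricular expectation, could be sketched like this. The mapping from exercise to expectation is invented for the example; in practice it would come from a list like the one described in Step 5.

```python
from collections import defaultdict

# Hypothetical mapping of KA exercises to overall expectations.
EXPECTATION_OF = {
    "Surface area": "Expectation A",
    "Volume of prisms": "Expectation A",
    "Solving equations": "Expectation B",
}

def group_by_expectation(scores, expectation_of=EXPECTATION_OF):
    """Group one student's {exercise: score} by expectation, sorted by name.

    Exercises missing from the mapping are collected under "unlisted".
    """
    grouped = defaultdict(dict)
    for exercise, score in scores.items():
        grouped[expectation_of.get(exercise, "unlisted")][exercise] = score
    return {name: grouped[name] for name in sorted(grouped)}

student_scores = {
    "Solving equations": 80.0,
    "Surface area": 90.0,
    "Volume of prisms": "incomplete",
}
for expectation, exercises in group_by_expectation(student_scores).items():
    print(expectation, exercises)
```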

PHEW! I think that’s about it.

Have you used Khan Academy? How do you use it with your classes? Let us know in the comments below!

– Laura Wheeler (Teacher @ Ridgemont High School, OCDSB; Ottawa, ON)

Inspired by this tweet …

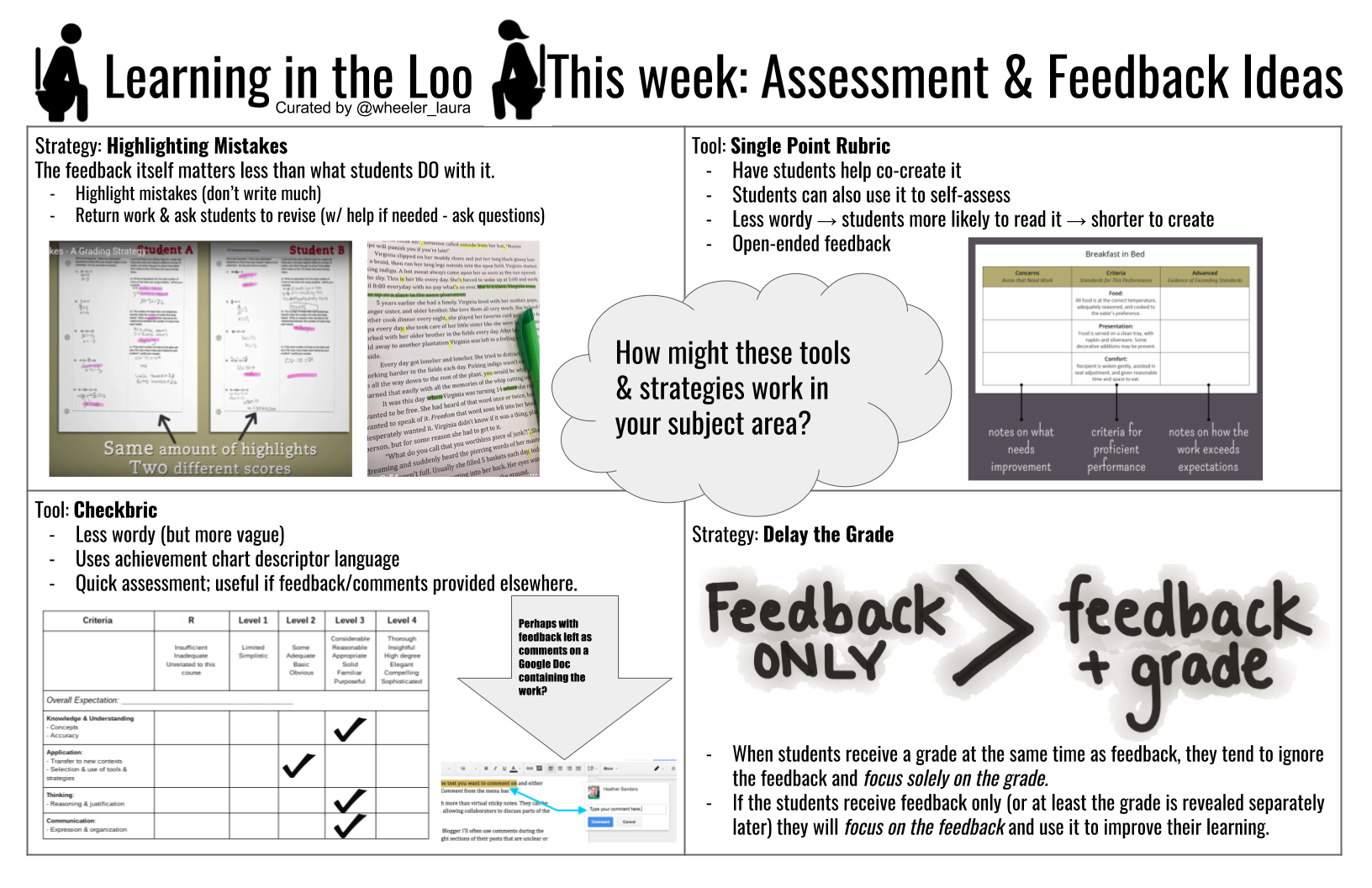

I asked my PLN to share their strategies for getting students to take action on the feedback we leave them on their work:

Their responses are compiled in my latest edition of Learning in the Loo:

The archive of my past editions can be found here in case you want to put some up in the bathrooms of your school too!

– Laura Wheeler (Teacher @ Ridgemont High School, OCDSB; Ottawa, ON)

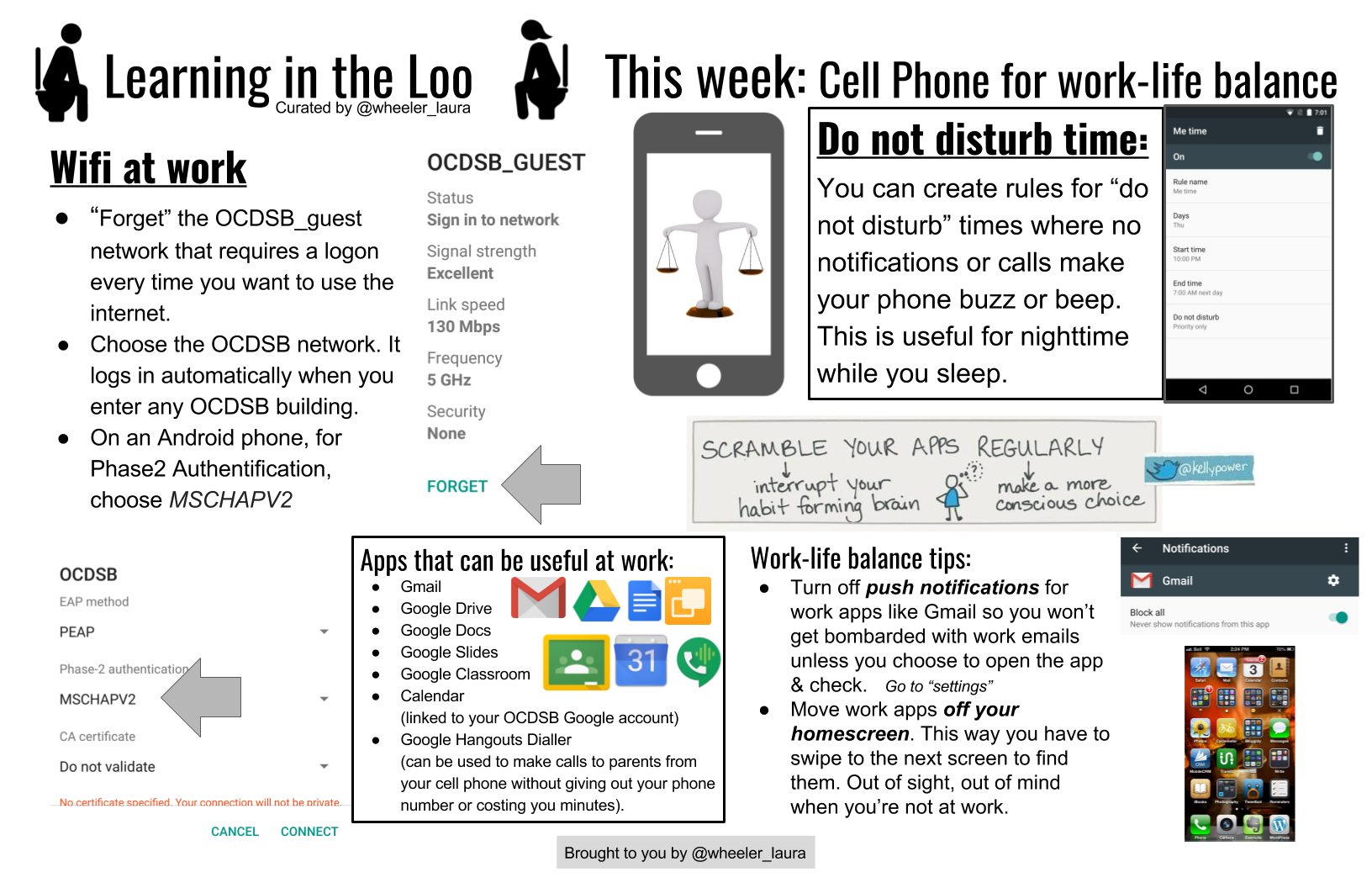

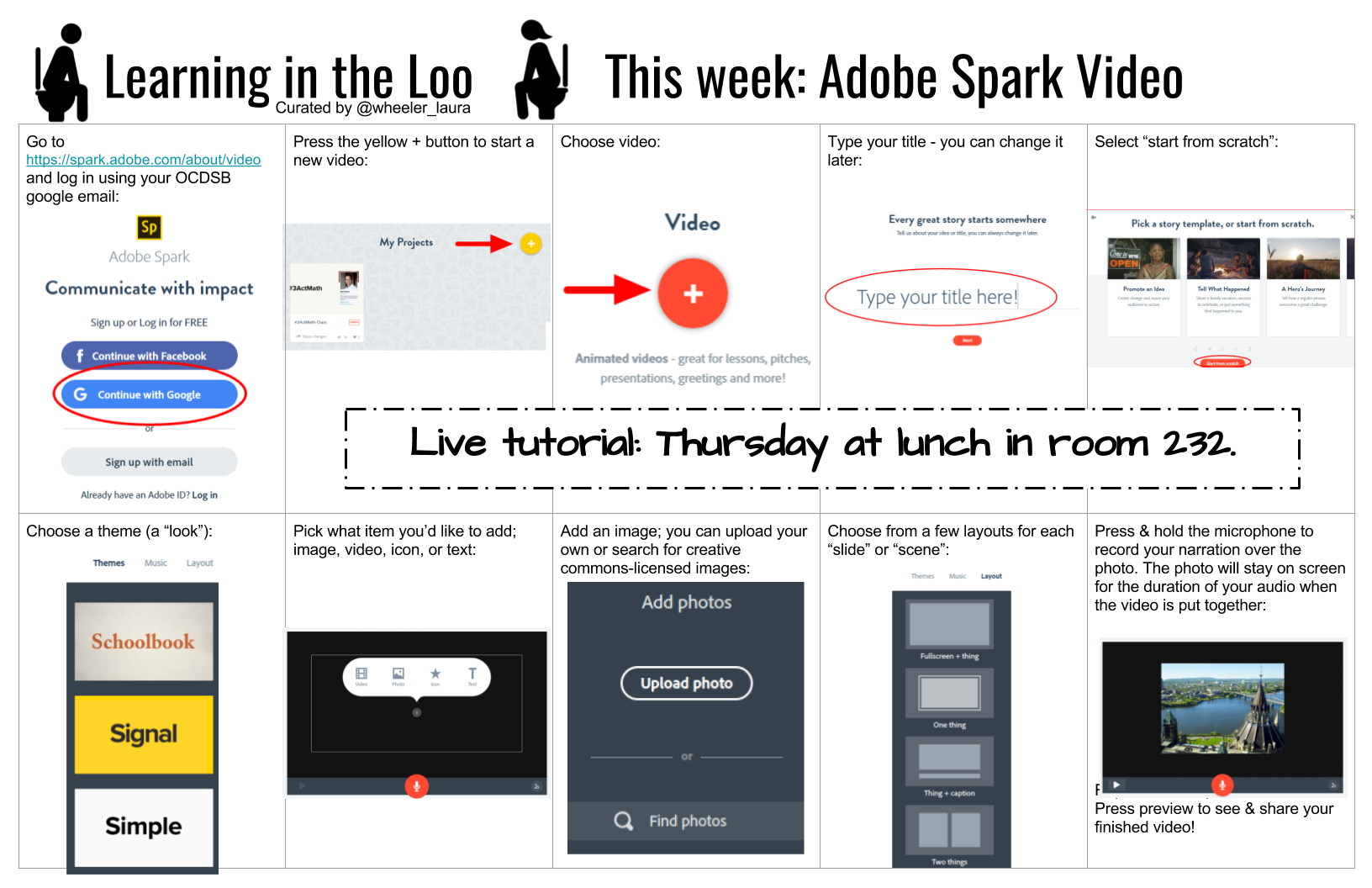

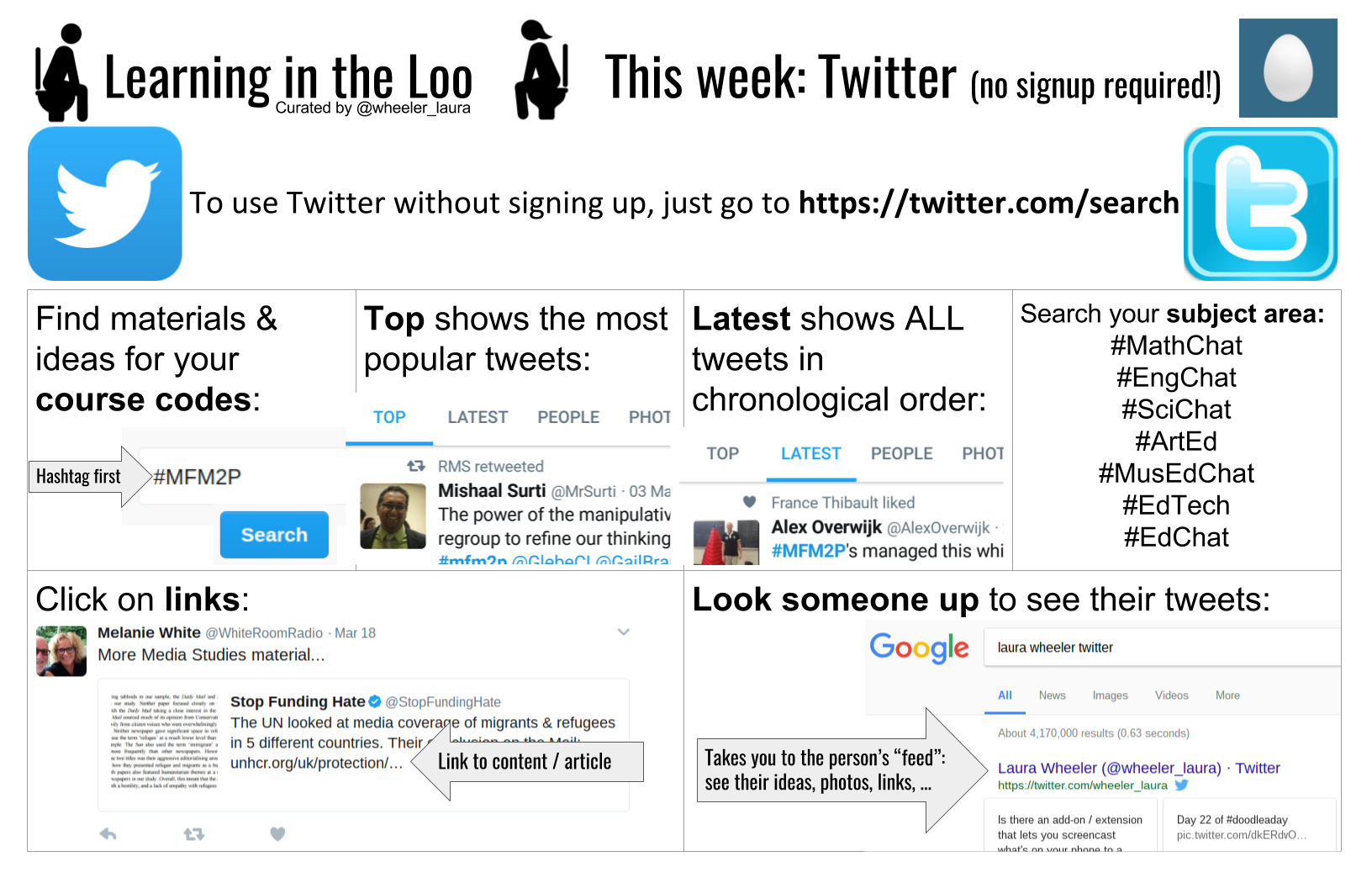

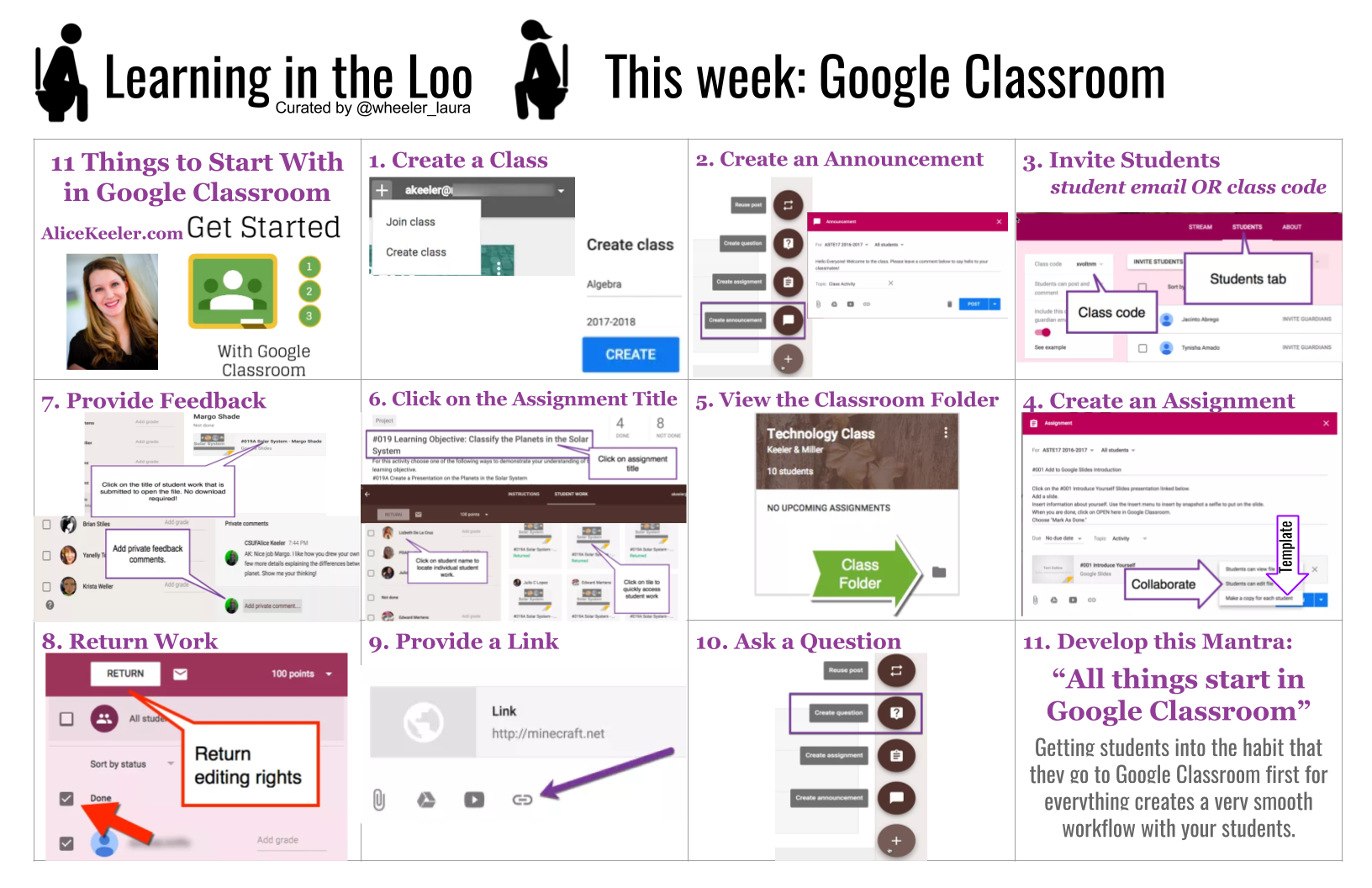

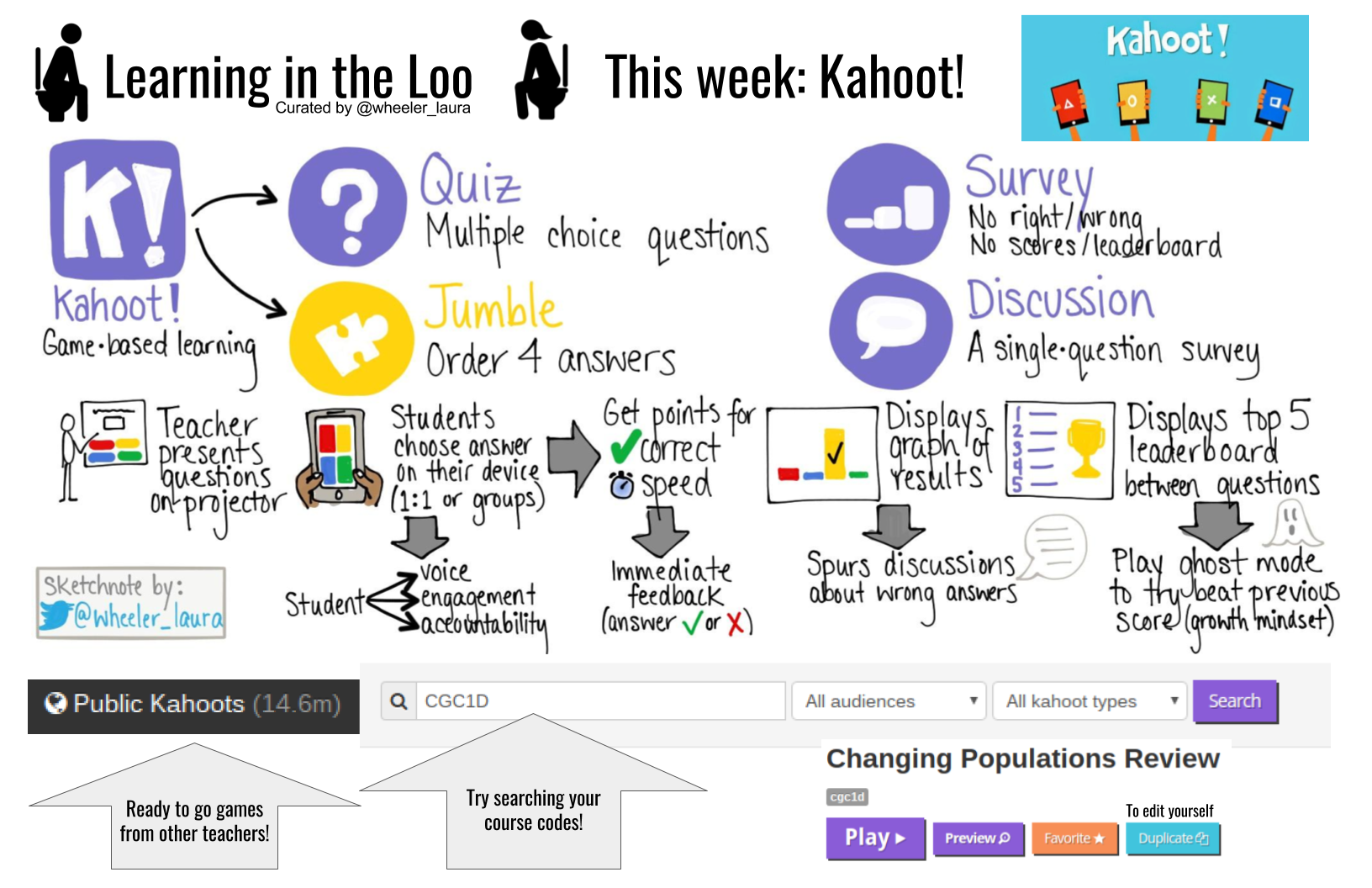

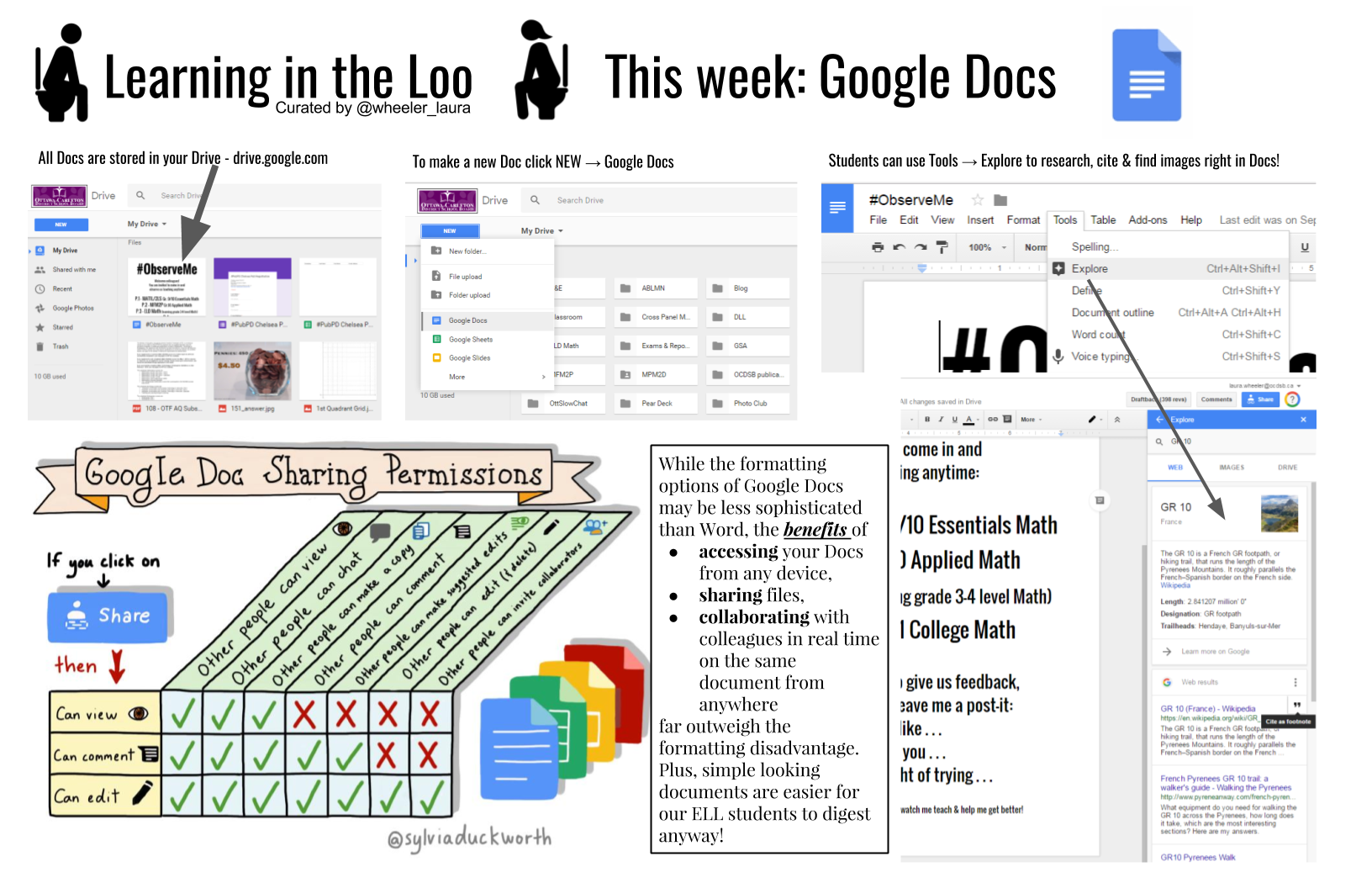

Do you ever read a great article or blog post and think I HAVE to share this with my colleagues! So you email everybody the link & say you have to read this. And then maybe 1 or 2 people actually read it?

I find so many great things on Twitter & blogs (#MTBoS) that I want to share with my colleagues, but they often don’t have (or make) the time to check them out. So when I happened upon a tweet about Learning in the Loo I thought it was genius – a captive audience!

So I have made it a habit to create & post a new Learning in the Loo 11×17″ poster in each staff toilet in our school every 1 or 2 weeks this semester. I curate the amazing things I learn about online & turn them into quick read how-tos or ideas to read while you … “go”. And it just occurred to me that I should have been posting them to my blog as I made them. But now you can get a whole whack of them at once and next year I’ll try to remember to post them as I make them.

The whole collection so far can be found here with printing instructions.

Feel free to make a copy (File –> make a copy). The sources of images & ideas are in the notes of the doc above too.

Here they are:

What would you share in your school’s first Learning In The Loo poster?

– Laura Wheeler (Teacher @ Ridgemont High School, OCDSB; Ottawa, ON)